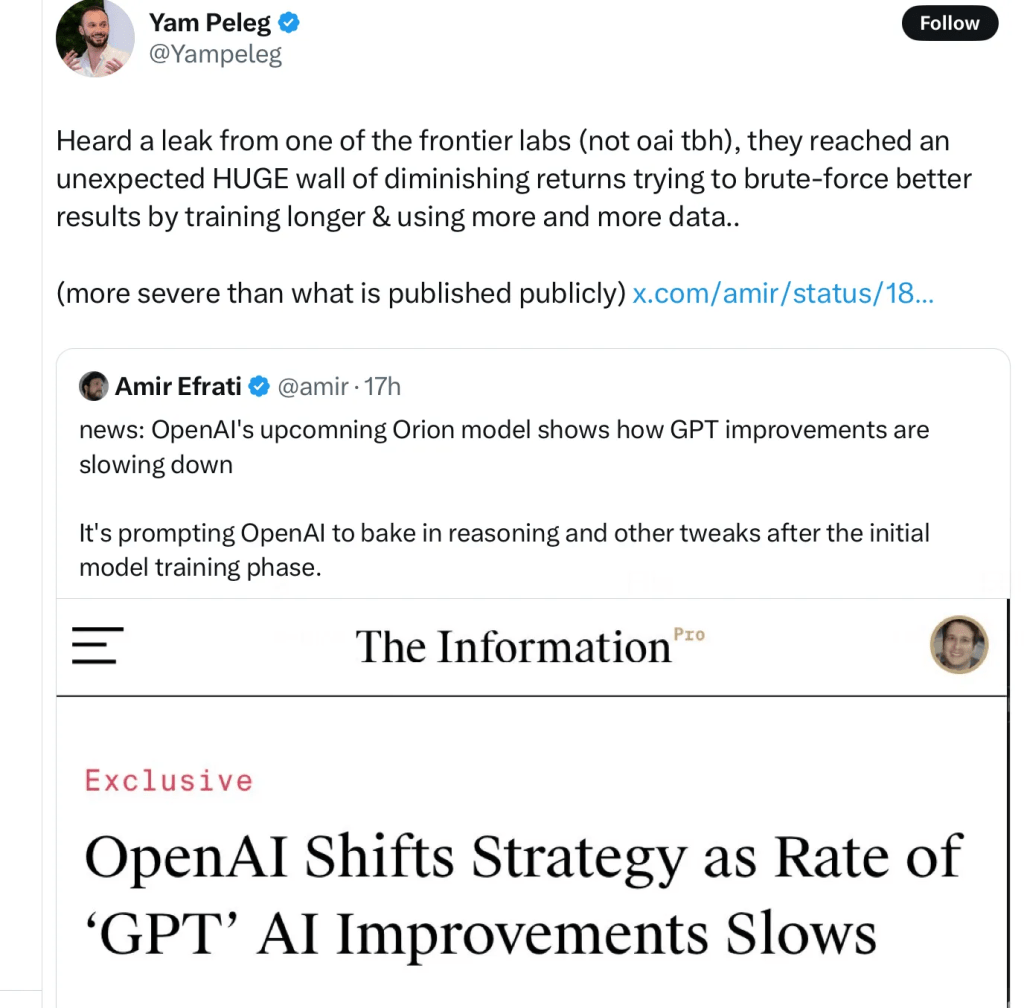

Just adding data and compute and training longer worked miracles for a while, but those days may well be over.

https://garymarcus.substack.com/p/an-ai-rumor-you-wont-want-to-miss

The thing is, in the long term, science isn’t majority rule. In the end, the truth generally outs. Alchemy had a good run, but it got replaced by chemistry. The truth is that scaling is running out, and that truth is, at last, coming out.

https://garymarcus.substack.com/p/confirmed-llms-have-indeed-reached?r=8tdk6

Artificial intelligence companies like OpenAI are seeking to overcome unexpected delays and challenges in the pursuit of ever-bigger large language models by developing training techniques that use more human-like ways for algorithms to “think”.

LLMs will remain very powerful tools. They will not become AGIs (there is a WHOLE BODY missing for that to happen). They will not destroy the world (we have corn-chip-eating, beer-swilling idiots who are much better at that). The sooner we stop having to watch Sam Altman beat off to his fantasies in public, the better we will all be.
